Welcome to AI Collision,

In today’s collision between AI and our world:

It’s AI earnings season!

Y2K vs AI: someone’s O has got to go

A great interview (not by me) on AI and freedom of speech

If that’s enough to get dial-up modeming, read on…

AI Collision 💥 it’s Big Tech AI earnings SZN

This week is the start of one of the most exciting times in the financial calendar.

It’s earnings reporting season!

Ok, so for most people that’s 100% a snooze fest. But for us giga-nerds, when Big Tech companies start reporting their quarterly earnings it’s all about the late nights, earnings calls, financial data and, of course, the forward-looking commentary.

Earnings season (or “szn”, as a lot of Financial Twitter (FinTwit) and Financial TikTok (FinTok) call it) comes around four times a year – typically at the end of January, April, July and October.

It’s fun because I enjoy figuring out which companies are going to beat earnings and which companies are going to miss.

The fun is amplified because a beat usually means a stock price pops higher in trading and a miss usually sees a stock price get crushed by the market.

But for the last year, there’s been a distinct thread between Big Tech companies and their quarterly earnings reporting.

It’s all driven by AI.

Amazon, Microsoft, Google, Meta, Nvidia, Apple and even Tesla are all talking very openly and bullishly about how AI is going to transform their businesses.

Some, like Nvidia, have been consistently talking about how AI is already transforming their businesses and boosting revenues, profits and earnings per share.

But it’s not all smooth sailing. The market is now starting to get over the hype-hump of AI-talk in earnings calls each quarter.

The question that’s now starting to be asked (it’s about time) is: how will these AI developments actually convert into profits?

Microsoft, for instance, announced this week that it had another strong quarter. The key driver of its growth is AI integrations in the company’s cloud services.

CEO Satya Nadella said in the earnings release:

“With copilots, we are making the age of AI real for people and businesses everywhere. We are rapidly infusing AI across every layer of the tech stack and for every role and business process to drive productivity gains for our customers.”

These “copilots” are Microsoft’s AI developments – things like GitHub Copilot or Microsoft 365 Copilot. They are still relatively new and there’s no doubt that development and improvement will be ongoing. But it’s clear that Microsoft has been able to run with the AI baton and actually turn it into growth.

Reporting on the same day, however, was Google.

Google, too, is big on growth and it did show a considerable amount of that in the company’s release, but the thing the market wanted to see was more growth in cloud technology.

You see, AI and “cloud” go hand-in-hand. And right now, as there’s an ever-increasing demand for applications around generative AI, it’s the dominant cloud providers that are eating up the bulk of growth and profit.

The thing is that there are really two big players in the cloud: Amazon and Microsoft. Google is a distant third and has a long way to go to catch up.

Let me put it another way. Amazon is the most dominant cloud provider. It’s investing heavily in AI through Amazon Web Services and its fulfilment and logistics businesses. I wrote about Amazon’s robotics in Tuesday’s AI Collision if you need a refresher.

Microsoft is definitely going big with its “copilots” but the company’s free generative AI is also fantastic to use. From experience, ChatGPT (which Microsoft partly owns through its investment in OpenAI), together with Microsoft’s integration of AI into Bing and Bing Image Creator, makes for a great user experience. You will note that a lot of our images here at AI Collision are generative AI – almost all of them come from Bing Image Creator.

This is drawing customers to Microsoft’s cloud.

And then there’s Google. It has Bard as a competitor to ChatGPT. Google is also rolling out beta versions of AI assistants through its Google Docs and other Google Cloud services.

But Google is lagging. It’s certainly not an AI leader, and that’s hurting its stock price. And if the company can’t close the gap on Amazon and Microsoft in the cloud (which goes hand-in-hand with AI) then it’s going to be a bumpy road ahead for the search and advertising giant.

Nonetheless, with all this action taking place and with Amazon, Apple, Nvidia (and more) still to report this earnings “szn”, it’s a fun and exciting time to be in AI and in the markets!

AI gone wild 🤪

It’s 31 December 1999.

Bruce and Diane Eckhart are preparing to bid farewell to all the technology in their lives.

According to Time reporter Joel Stein, they awoke at 4:30 am and,

“… immersed themselves in technology for what they believed was the last time, turning on their two televisions, dialling up the internet and clicking on their shortwave radio to monitor the first Y2K rollover in Kiribati.”

They even had 12 cans of Spam, which apparently Diane was prepared to eat “disaster or not.”

They weren’t alone.

In late 1999, MTV Interactive (MTV’s online division) sent a bunch of kids into a bunker underneath Times Square calling it “The Bunker Project”.

You can view the trailer for MTV’s stunt here on the Wayback Machine.

While MTV’s thing was a bit tongue-in-cheek, there were plenty of people like the Eckharts who were very afraid of what would happen when the clocks struck 00:00 on 1 January 2000.

It was known as the Y2K bug and it was a mix of hype, fear, stupidity and an early indication of the power of memes.

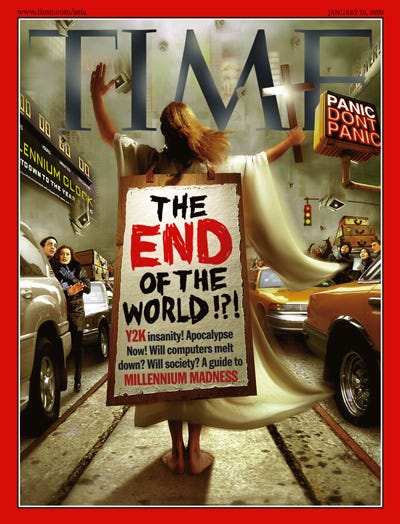

Part of the hysteria around Y2K was thanks to the media running with this ridiculous story. Here’s Time Magazine’s cover in early January 1999.

People who actually knew how technology worked weren’t the least bit bothered by Y2K, but that didn’t stop the fear from spreading.

Even the US Department of Commerce published a paper titled “The Economics of Y2K and the Impact on the United States.”

This paper downplays the Y2K impact on the US a bit. It does consider foreign readiness for Y2K and the impact on the US. It also goes on to estimate that around $100 billion would be spent on ensuring Y2K readiness.

I’m not joking. It was only 23 years ago that the world and the people in power were spending billions on something that, frankly, was about as disruptive as an ashtray on a motorbike.

Today, there are weird and wonderful parallels to be drawn with how fear and uncertainty spread around AI.

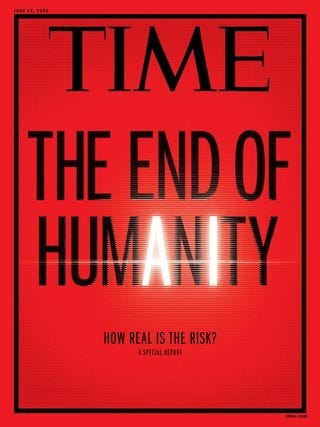

What better way to consider this than with the cover of Time Magazine’s South Pacific Edition from June this year?

What created such anticipation and anxiety around Y2K was the idea of our digital world crashing down around us. A small “bug” in the system that could cripple the modern world and decades of human progress.

Also, there was a very specific date that we could count down to, knowing that at that very second we’d either all be f***** or be fine.

Thankfully, it was the latter.

The AI revolution is a little different in that there is no fixed time. There is no countdown date to “the singularity” where it’s believed that AI systems will become more aware and conscious than humans. At least there’s no countdown to it yet.

But the fear and anxiety around it are rooted in that idea of losing control to a machine. That human progress and human invention will be the ultimate demise of humans, and a machine or machines could cripple the modern world and decades of human progress.

The underlying fear is of change, and of losing control. While it might be the path of least resistance to think that AI is going to be the end of humanity, just as Y2K bunkering was the path of least resistance in 1999, the truth is that the AI threat will likely prove about as troublesome as the Y2K threat did.

Boomers & Busters 💰

AI and AI-related stocks moving and shaking up the markets this week. (All performance data below is over the trailing week.)

Boom 📈

Appen Ltd (ASX:APX) up 1%

Intuitive Surgical (NASDAQ:ISRG) up 0.73%

C3.ai (NYSE:AI) up 0.6%

Bust 📉

Duos Technologies Group (NASDAQ:DUOT) down 22%

Predictive Oncology (NASDAQ:POAI) down 19%

BigBear.ai Holdings (NYSE:BBAI) down 7%

From the hive mind 🧠

Artificial Pollteligence 🗳️ The Results Show

Thanks to ongoing poll glitches we published another poll on Tuesday. This time the question was,

Will more human-like AI lead to,

Bigger corporate profits

Safer, abundant AI

The end of humanity

As usual…the results…are…in…

And the winner is…

Bigger corporate profits!

Well, I’m quite glad that “the end of humanity” didn’t win that round.

What this tells me is that smart people realise that better AI is actually unlikely to be the existential threat that it’s made out to be.

All you have to do is think about how society has been able to handle revolutionary technologies in the past to see that we might create wonderful things, then terrify ourselves about those wonderful things, but ultimately these wonderful things just end up being wonderful things and helping level up society.

I don’t see AI being any different.

Now, because we’re still a bit off-kilter with our polls, I wanted to run something past you. This has nothing to do with AI this time around. In fact, it’s a bit of a survey.

We typically send AI Collision to you at 9am Tuesdays and Thursdays. I have no idea if that’s a good time or not.

But I was thinking about it and came to the conclusion that I’d probably want to read AI Collision on my commute to work (if I had a commute to work) but I don’t know if that’s what you prefer.

So here’s this week’s poll…

Weirdest AI image of the day

Lego sets based on unexpected movie licences – r/Weirddalle

ChatGPT’s random quote of the day

"Success in creating AI would be the biggest event in human history. Unfortunately, it might also be the last, unless we learn how to avoid the risks." – Stephen Hawking

Thanks for reading, see you next Tuesday, and don’t forget to leave comments and questions below.

I disagree with your points regarding the likely 'non-problem' that will be (is) AI. Granted, AI is no new phenomenon; it has existed since Pong (what a game!) and arguably before in various guises.

I also accept that most people are scared of losing control to a machine and that this in itself is not the largest concern and is, empirically speaking, misplaced fear.

However I think for the more discerning individuals out there, that truly understand the tech-human fusion, the biggest problem is losing control to the humans that control the AI and who will ultimately misuse it.

As power concentrates in the hands of the few (companies, individuals, dynasties etc) we are increasingly at the mercy of those controlling the flow of information. History is being rewritten in front of our eyes. As each technological step forward occurs, its benefits will quickly fade as the technology is co-opted and subsumed into the belly of the larger corporate or big-government beast.

We are doomed not because of Terminator, but because of the power controlling a 'Terminator' (automated food dispensing, 'intelligent redistribution' of our funds etc) and the inherent bias that will exist in the code.

Open Source AI carries far fewer risks of inherent misuse; however, I cannot see people putting up with the inconveniences that often come with OS. Most will, lazily but understandably, far rather take an integrated and convenient 'it just works' route than something that requires their time and effort to understand - an "Apple of AI" if you will. And that will be the significant issue with AI.

AI will be our downfall, if something else doesn't beat it there first, but it will as ever be human error and not AI that is at the core of our demise.